VeasyGuide at ASSETS 2025 Recap and Presentation Recording 🎥

In beautiful Denver, I had the privilege of presenting our work “VeasyGuide: Personalized Visual Guidance for Low-vision Learners on Instructor Actions in Presentation Videos” at ASSETS 2025 (International ACM SIGACCESS Conference on Computers and Accessibility). Even more exciting, VeasyGuide won a 🏆 Best Paper Honorable Mention award!

This presentation marked an important milestone in my research on making learning accessible for low-vision learners. Plus, this was my first time attending ASSETS! In this post, I will share my experience at ASSETS and around Denver during my time there.

Feel free to jump ahead to see the full recording of my presentation.

I didn’t know that VeasyGuide had won an award. One morning, all of a sudden, I started getting “Congratulations!” messages from friends. It seems they saw it while going over the online program. But I was oblivious to it! So it was such a nice surprise to wake up one day and realize that we had won an award. But let’s get back on track: the conference!

This year the conference was held at the Curtis Hotel in Denver, Colorado. I had never been to Denver before, but I really liked the city. The downtown had a very nice vibe, and even some free public transportation! The shuttle that runs along 16th Street is free to use, which is so nice to see. I usually stay in hostels, which are not always near the conference venue, so a free shuttle was a very nice surprise. It goes from the big train station (Union Station) all the way down almost to the public library!

I took the opportunity to also visit the library. It is a very pleasant building, with a vintage kind of atmosphere. I sat down to do some work at some random spot, and as I was getting up to leave, I stumbled on a very interesting sign. And by interesting I mean very relevant to me!

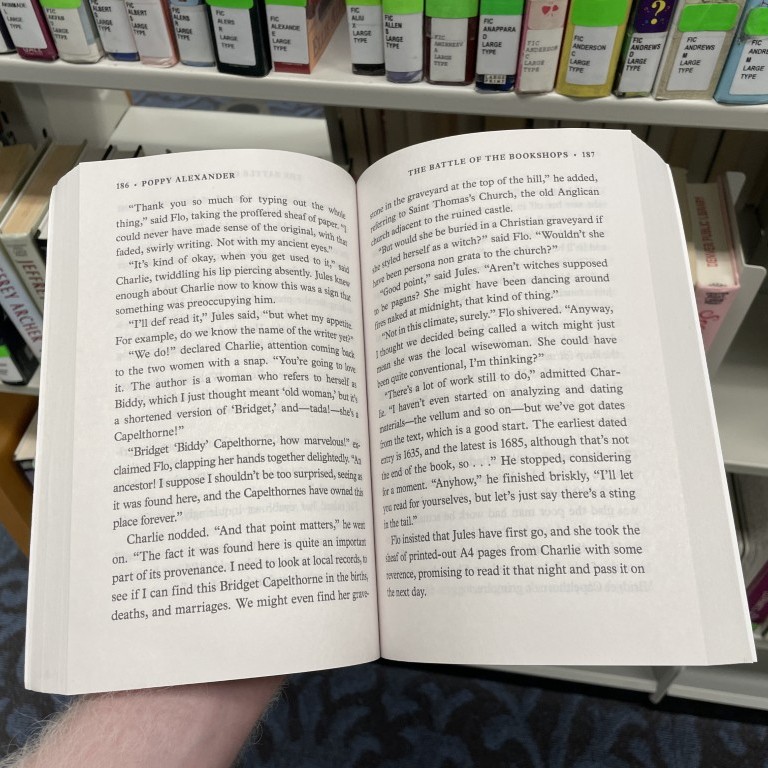

I was sitting right next to the large print area! What an interesting coincidence, right? So I decided to look through the books there, and I noticed something: the visual style of the books did not exactly match. Some books used a larger font and some a smaller one. I wonder if there are any regulations on large print books? I tried to capture some of the books I saw there. I think the size difference is not that easy to notice in pictures, but when I was there looking at these books, the difference was much clearer.

This was my first time attending ASSETS, which has a long history going back to 1994. The conference is much smaller than CHI (which it sprang out of), and was single-track until last year. This year, though, it shifted to a dual-track structure, split between two rooms. However, the entire conference took place on the same floor of the hotel, which was very comfortable. Since everyone was on the same floor, moving between the two rooms, there were plenty of opportunities to chat and connect with other researchers and attendees.

It was so inspiring to see so many people interested in accessibility! Everyone is so passionate about their work and the impact it could have, which is something I absolutely share. It was especially impactful for me to connect and chat with other researchers with disabilities. As a researcher with a disability myself, it was very important to see how many others pursue research despite everything! All throughout my life, almost everything has been some kind of a struggle, but to me, this is what makes life so interesting: overcoming these difficulties. And I’m not the only one. In a nutshell, to me, ASSETS feels like home.

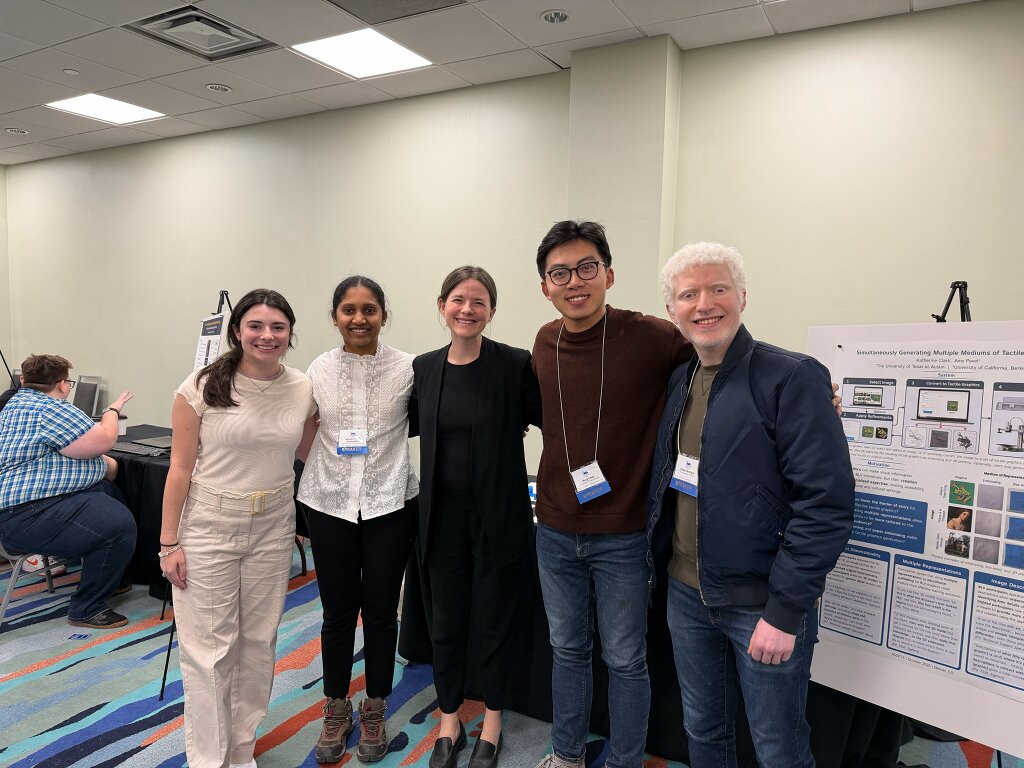

Another wonderful thing is that I was able to connect with past colleagues from The University of Texas at Austin (UT Austin). I spent a summer there as a visiting researcher, and it was such a pleasure to be able to meet again and reunite! I was especially glad to see Prof. Amy Pavel, who hosted my stay at UT Austin. Amy co-authored two papers with me for ASSETS 2025: VeasyGuide and Task Mode, which is a project by Ananya, another amazing researcher from UT Austin.

Before I move on to share my presentation experience, it’s important to mention that even though it was my first ASSETS, I was able to co-author another full paper (Task Mode) and a poster! The poster was led by Veronica, who is an amazing researcher from The University of Michigan. It was a delight working on this topic with Veronica. People with ADHD are often left behind, with not much research conducted on ways to help, assist, or improve their daily life. This is especially true for professionals with ADHD, for instance, when managing online communication via email.

Now, about VeasyGuide! The presentation and the interaction with the audience were very impactful for me. I got so many wonderful questions during and after the talk! I truly appreciate everyone who watched, asked questions, and provided feedback on this work. Below I put the video recording of the talk and the transcript of the Q&A session. There are so many more things to do to improve online learning accessibility for low-vision learners!

I truly appreciate the enthusiasm and interest of people in VeasyGuide. We got some great questions during the session, and I am happy to share them here.

Q1: I’m interested in how you use computer vision to recognize the different actions. Were there any actions that were harder or easier to pick up?

So I can quickly go over the recognition. We divide the video into portions, each less than one second long. And for each portion, we look at the first and last frame. Then we find the differences between them. Through that, we can detect contours of those differences. And as we create these contours over time for many different frame windows, we can basically create a graph that we connect based on proximity in space and time. So different contours can be connected based on those measurements. And that’s how we create edges.

We call this the region of change graph. And over time, we can use that and connected component analysis to detect those visual activities that stay consistent. So in our work, some of the activities were a bit more difficult. For instance, fast cursor movements. With fast cursor movements, we detect this, but we might not be able to connect it as one activity because it covers a very wide range in the slide over a short period of time.

Also, we found that because of visual artifacts from compression, even though we do clean up some of them, some visual artifacts were falsely detected as activities. So these are some of the difficulties that we have with the pipeline. Thank you for your question.
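
To make the grouping step above a bit more concrete, here is a minimal sketch in Python. It is not the VeasyGuide implementation: it skips frame differencing and contour extraction entirely and assumes each detected change has already been reduced to a bounding box tagged with the index of its frame window. The names `Region`, `box_gap`, and `group_activities`, and the thresholds `max_gap` and `max_windows`, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Region:
    """A changed area found in one frame window (hypothetical structure)."""
    window: int  # index of the sub-second frame window
    x: float
    y: float
    w: float
    h: float


def box_gap(a: Region, b: Region) -> float:
    """Shortest distance between two boxes; 0 if they touch or overlap."""
    dx = max(a.x - (b.x + b.w), b.x - (a.x + a.w), 0)
    dy = max(a.y - (b.y + b.h), b.y - (a.y + a.h), 0)
    return (dx * dx + dy * dy) ** 0.5


def group_activities(regions, max_gap=40.0, max_windows=2):
    """Link regions that are close in space and time, then return the
    connected components. Thresholds are illustrative assumptions."""
    parent = list(range(len(regions)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i, j in combinations(range(len(regions)), 2):
        a, b = regions[i], regions[j]
        close_in_time = abs(a.window - b.window) <= max_windows
        if close_in_time and box_gap(a, b) <= max_gap:
            parent[find(i)] = find(j)  # add an edge by merging the two sets

    groups = {}
    for i, region in enumerate(regions):
        groups.setdefault(find(i), []).append(region)
    # Order the activities by when they first appear.
    return sorted(groups.values(), key=lambda g: min(r.window for r in g))


# Handwriting that grows slowly across three windows, plus a far-away cursor.
regions = [
    Region(0, 10, 10, 20, 20),
    Region(1, 25, 12, 20, 20),
    Region(2, 40, 14, 20, 20),
    Region(1, 500, 400, 10, 10),
]
activities = group_activities(regions)
```

In this toy input, the three nearby boxes end up in one activity and the distant cursor box in another. It also hints at why fast cursor movements are hard: two cursor positions in consecutive windows that are far apart on the slide fail the spatial check and are left as separate activities.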

Q2: So I think you mentioned that there’s customization functionalities for this tool. I think that’s great, especially given how much variation there is across people’s vision conditions and cognitive patterns. But I was wondering, like, how much did you notice people use that and is it different across individuals or even like within individuals?

So since the user study of VeasyGuide, of course, is time limited, we couldn’t test a lot of different long videos with the same users. But we did notice a lot of different usage in personalization. For instance, we found that users with a narrow field of vision, peripheral vision loss, used one feature that we added through the co-design study, which is an animation. So when an activity shows up, the highlight can grow and shrink. And they used that and found it very helpful.

But for users with photosensitivity, for instance, this was not very useful and actually kind of harmful as well, because of the changes in brightness from the growing and shrinking of the highlight itself. And other users used color inversion, or changed the colors of the highlights and chose different visual annotations, like the pointer or the cursor indicator. So we found a lot of differences between users, and have more details in the paper.

Q3: You mentioned the eventual ability to be able to use your own videos for this. Is there a pre-processing step for the videos? Or is this being done essentially live as the video is broadcasting?

So we have a section in our paper where we detail how to make VeasyGuide available for live content as well. And there are several ways to do that. But right now, VeasyGuide relies on a processing stage. So in our plan, the processing would be done on the server side without retaining the video, and the results would then be made available on the client side, which should not take too long.

I am looking forward to ASSETS 2026 in Portugal! ✨

